In Search of Speed

As I mentioned in a previous post, saying that my HDD’s volume group was on /dev/sdb1 is not quite true. Although I wanted to have a large scratch space for virtual machines and renderings, I did not want to sacrifice too much on speed. Both of those operations benefit from faster disks. The cost of SSDs in the 512GB - 1TB range is quite prohibitive, so I sought a compromise.

RAID

Striping

I began to investigate RAID solutions. Of course there is the obvious RAID 0 array, but I have never been a fan of striping or tying two disks together in a non-redundant way. RAID 0 doubles your risk of disk failure and makes your setup more complicated. I guess I am uneasy with it because one time back in about 2002 I got a new Seagate drive and rather than making it a new E: drive, I extended my existing volume onto it. About 2 months later, the drive started to fail and I had a very difficult time getting it out of the volume without losing all my data. Having a bad drive take out a good one is maddening.

Hardware vs Software

Before I got much further, I realized I would have to answer the question of hardware or software RAID. After a quick bit of googling, some ServerFault questions and a couple of blogs, I decided on software. This blog post had a lot to do with convincing me:

http://www.chriscowley.me.uk/blog/2013/04/07/stop-the-hate-on-software-raid/

Mirroring

At this point I started to look into RAID 1 (mirroring) and btrfs. I quickly discarded the idea of btrfs because I am running Kubuntu 12.04 LTS and everything I read said I should be using a more recent kernel. I am willing to patiently wait for it to come to me in the next LTS release.

What I found regarding Linux software RAID 1 is kind of surprising. All over the web you can find benchmarks that show, counter-intuitively, that RAID 1 is not any faster than a single disk. I am only referring to reads, as it is obvious that since you write to both disks it cannot be faster in that regard. It seems most people assume, like I did, that Linux software RAID 1 would read from both disks in parallel and therefore reads could peak out at 2x.

After a little more investigation, I found out that because the data is not striped, Linux only loads data from a single disk for an individual read operation. It will use both disks in parallel for multiple read operations. So for a single large file, it will read at 1x. For two large files it can read up to 2x.
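The behaviour described above can be sketched as a toy model. This is an idealized illustration, not a benchmark: the function name and the 100 MB/s per-disk figure are assumptions made up for the example, and it ignores seeks, caching and scheduler effects.

```python
def raid1_read_throughput(streams: int, disks: int = 2, disk_mb_s: float = 100.0) -> float:
    """Idealized aggregate read rate (MB/s) of a mirror.

    Each read stream is served by a single disk, so the array only gets
    faster when there are several streams to spread across the mirrors.
    """
    if streams < 0 or disks < 1:
        raise ValueError("streams must be >= 0 and disks >= 1")
    busy_disks = min(streams, disks)  # a stream cannot be split across disks
    return busy_disks * disk_mb_s


if __name__ == "__main__":
    for n in (1, 2, 5):
        print(n, "stream(s):", raid1_read_throughput(n), "MB/s")
```

In this model one large file reads at the speed of one disk, and the 2x figure only appears once at least two reads are in flight at the same time.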

RAID 5

Since I was going for speed, that meant RAID 1 was out. With that, I started looking at RAID 5. The immediate problem with RAID 5 is the cost. With a minimum of 3 disks, the smallest array possible already costs more than a 256GB SSD. I need more than 256GB, but any solution that approaches the cost of a 512GB SSD would favour the SSD.

Unfortunately, RAID 5 with three disks has pretty dismal write speeds. Although I am mainly focusing on reads, I would like to keep the write speeds up too. I believe that adding another disk to the array would increase both the read and write speeds, but at four 1TB drives you are smack dab in the 512GB SSD price range. I would certainly have more disk space but I honestly do not need 3TB. Finally, RAID 5 suffers from long rebuild times, during which other drives can fail.

RAID 10

Enter RAID 10. RAID 10 is striping over mirrored sets, not to be confused with mirroring over striped sets. RAID 10 is fast. Reads are akin to striping and writes go at the speed of one disk. Also, rebuild times are much faster than RAID 5. Here come the downsides though. First, since the disks are in a mirrored configuration, you lose 50% of the total disk capacity. Second, it requires a minimum of four disks. I don’t care about the lost disk space; I doubt I would have used it anyway. But the cost issue raising its head again was disheartening.
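To make the capacity trade-offs concrete, here is a minimal sketch of the usual usable-capacity arithmetic for the levels discussed so far. The function name and the level strings are hypothetical labels for this example, and it assumes equal-sized disks.

```python
def usable_capacity_tb(level: str, disks: int, disk_tb: float = 1.0, copies: int = 2) -> float:
    """Usable capacity in TB for an array of equal-sized disks."""
    if disks < 1:
        raise ValueError("need at least one disk")
    if level == "raid0":
        return disks * disk_tb            # pure striping, no redundancy
    if level == "raid1":
        return disk_tb                    # every disk holds the same data
    if level == "raid5":
        if disks < 3:
            raise ValueError("RAID 5 needs at least 3 disks")
        return (disks - 1) * disk_tb      # one disk's worth goes to parity
    if level == "raid10":
        return disks * disk_tb / copies   # each block is stored `copies` times
    raise ValueError(f"unknown level: {level}")


if __name__ == "__main__":
    print("RAID 5, 4 x 1TB :", usable_capacity_tb("raid5", 4), "TB")
    print("RAID 10, 4 x 1TB:", usable_capacity_tb("raid10", 4), "TB")
```

With four 1TB drives this gives the 3TB mentioned for RAID 5 and half the raw space for RAID 10.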

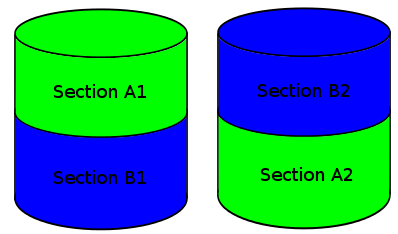

The whole thing began to bug me at this point. Why did RAID 1 not get the read speeds of RAID 10 (note: I hadn’t found the reason at this point)? Why did RAID 10 require four disks? I kept thinking about it and I was sure that there should be a way to configure two disks into something like a RAID 10 array that would get the same read speeds. I realized that you should be able to segment the drives into two sections each. You could mirror the data of the first section of the first disk to a section of the second disk and vice versa. You should then be able to stripe across the two mirrored sets.

Tasty Cake

That is when the light bulb went on. I had seen something like this when I was reading about all the Linux software RAID types. I went searching again and found my holy grail:

Linux kernel software RAID 1+0 f2 layout

Aka RAID 10 far layout (with two sections). It builds a RAID 10 array over two disks.

With this setup, the read speeds are similar to striping and the write speeds are just slightly slower than a single disk.
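For reference, an array like this is normally created with mdadm in `--create` mode, using `--level=10` and `--layout=f2`. The sketch below only assembles the command line and does not run it. The helper name and the device paths (`/dev/md0`, `/dev/sdX1`, `/dev/sdY1`) are placeholders for illustration, not the devices from my machine.

```python
import shlex
from typing import List


def mdadm_create_far2(md_device: str, members: List[str]) -> List[str]:
    """Build (but do not execute) an mdadm command for a RAID 10 far-2 array."""
    if len(members) < 2:
        raise ValueError("a far layout with two copies needs at least 2 member devices")
    return [
        "mdadm", "--create", md_device,
        "--level=10",                        # Linux md RAID 10
        "--layout=f2",                       # far layout, two copies of each block
        f"--raid-devices={len(members)}",
        *members,
    ]


if __name__ == "__main__":
    # Placeholder device names; substitute real partitions with care.
    cmd = mdadm_create_far2("/dev/md0", ["/dev/sdX1", "/dev/sdY1"])
    print(shlex.join(cmd))
```

Creating an array overwrites what is on the member devices, so it is worth printing and double-checking the command before running it as root.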

It turns out you can build these things in a bunch of crazy ways. You can specify the number of disks (k), copies of the data (n), and sections (f). So you could have 2 copies over 3 disks, 3 copies over 4 disks with 3 sections, etc.

Once again, software RAID shows its value. Without the constraints that hardware enforces, you are free to set up your system as you please.
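To see why a far layout reads like a stripe, it helps to map out where the chunks land. The following is a simplified model of my understanding of the far layout, not code taken from the kernel: each section holds one complete striped copy of the data, and every later copy is shifted by one disk so that no two copies of a chunk share a drive. The function name and the return structure are assumptions made up for the example.

```python
from typing import List


def far_layout(chunks: int, disks: int = 2, far_copies: int = 2) -> List[List[List[int]]]:
    """Return sections[copy][disk] -> chunk numbers stored there, in order."""
    if far_copies > disks:
        raise ValueError("cannot place more copies than there are disks")
    sections = [[[] for _ in range(disks)] for _ in range(far_copies)]
    for chunk in range(chunks):
        for copy in range(far_copies):
            disk = (chunk + copy) % disks   # each copy is rotated one disk further
            sections[copy][disk].append(chunk)
    return sections


if __name__ == "__main__":
    layout = far_layout(6, disks=2, far_copies=2)
    for copy, section in enumerate(layout):
        for disk, stored in enumerate(section):
            print(f"section {copy}, disk {disk}: chunks {stored}")
```

For two disks, the first section is an ordinary stripe (even chunks on one disk, odd chunks on the other) and the second section holds the same chunks with the disks swapped. A sequential read can be served entirely from the first section of both disks, which is where the striping-like read speed comes from, while every write has to land in both sections.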

Epilog

I have also summarized this post as an answer to a question on serverfault: https://serverfault.com/questions/158168/slow-software-raid?rq=1

In my next post I am going to talk about performance testing my setup and a few surprises I found.

Be mindful of hard errors which are the reason that RAID no longer lives up to its original promise.

If one of the drives fails, then during the rebuild if you get an error - the entire array will die.

http://www.zdnet.com/article/why-raid-5-stops-working-in-2009/

SATA drives are commonly specified with an unrecoverable read error (URE) rate of one error per 10^14 bits read, which means that roughly once every 12.5 terabytes, the disk will not be able to read a sector back to you.

http://www.lucidti.com/zfs-checksums-add-reliability-to-nas-storage

That's interesting. I read the first article in the course of investigating my initial setup. I recall reading that higher capacity drives were now coming with a 10^15 URE rating. However, I just checked and the drives I have only have a 10^14 rating.

However, I am not sure that it's an issue with mirrored drives like it is with RAID-5. I could be mistaken but my understanding is that it's only an issue because of the parity calculation and the fact that a drive, by itself, isn't readable. That is not a problem with mirroring.

Furthermore, I still feel that my setup gives me an edge over a single drive. If you only have one drive, and it fails, you're done. With my RAID 10 f2 setup, I at least have a chance of recovering. And since I'm only using < 666 GB, I still only have, worst case, a 1 in 24 probability of a URE.
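The URE arithmetic in this thread can be checked with a short sketch. It assumes that a rating of 10^14 means one unrecoverable error per 10^14 bits read on average, that errors are independent, and that a Poisson approximation is good enough. The function names are made up for the example.

```python
import math


def bytes_per_ure(bits_per_error: float = 1e14) -> float:
    """Average number of bytes read per unrecoverable read error."""
    return bits_per_error / 8


def p_at_least_one_ure(bytes_read: float, bits_per_error: float = 1e14) -> float:
    """Probability of hitting one or more UREs while reading `bytes_read` bytes."""
    expected_errors = bytes_read * 8 / bits_per_error
    return -math.expm1(-expected_errors)   # 1 - exp(-expected_errors)


if __name__ == "__main__":
    print("TB per URE at 10^14:", bytes_per_ure() / 1e12)
    p = p_at_least_one_ure(666e9)           # reading 666 GB (decimal gigabytes)
    print(f"P(URE) reading 666 GB: {p:.3f} (about 1 in {1 / p:.0f})")
```

Under these assumptions 10^14 bits works out to 12.5 TB, and reading a full 666 GB comes to a chance of a little over 5%, or about 1 in 19. That is the same order of magnitude as the 1 in 24 quoted above; the exact figure depends on how much data is actually read and how the rating is interpreted.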

But this highlights another advantage of Linux software RAID. Namely, you could do an f2, n3 layout over 3 disks. You would have three copies of the data on three disks, with a far layout (giving you the striping speed advantage). It would sacrifice more storage than a 3-disk RAID-5 setup, but would have faster rebuilds, more redundancy and a very low probability of a URE failure.